“AI doesn’t replace judgment. It sharpens it.”

—Douglas E. Anderson, DHA, MBA, FACHE, Founder of SHELDR.com

Author’s Note: As a former military healthcare executive, I incorporate my observations and experiences into research, coaching, and publishing on AI in the health and human services sectors to help organizations be more efficient, effective, and authentic. Also, the Military Health System (MHS) Digital Transformation Strategy has been published for general consumption.

Executive Summary: Recent sloppy and biased reporting on Major General William “Hank” Taylor’s comments about using AI in the military has made it seem as though he was trying to do something bad with ChatGPT, while glossing over the fact that the Department of Defense has been strict about AI since 2022. This commentary critiques the misleading narrative, analyzes journalistic bias and omissions, and proposes additional learning opportunities for more responsible coverage and understanding of AI-assisted decision-making in defense and health leadership.

The Problem with Sensationalism

Misleading titles, disingenuous framing, and selective omission. Those three elements define how too many tech stories are written today—and the Yahoo article on Major General Taylor’s comments is a textbook case.

The article, titled “Top U.S. Army general says troops should use ChatGPT to help make decisions,” implies the Army is outsourcing command authority and decision-making to a chatbot. That’s not true, not responsible, and not remotely what Taylor said. His remarks, in full context, referenced AI as a decision-support tool, not an autonomous commander (or CEO). The difference is profound. Yet the article flattened nuance into a clickbait headline designed to inflame anxiety and boost engagement.

Having spent decades leading, advising, and training decision-makers in complex systems, I recognize this for what it is: another example of narrative distortion that undermines public trust in both leadership and technology.

Beware.

Article Critique

Overview: The story sensationalizes a responsible statement about innovation. It exaggerates Taylor’s remarks, ignores context, and omits crucial discussion of oversight. Readers are left believing the military is experimenting recklessly with artificial intelligence, when the Department of Defense is one of the few institutions globally enforcing formal AI governance and ethical frameworks.

Strengths: The headline grabs attention—no question there. It touches a cultural nerve about AI’s growing role in life-and-death decision-making. It uses direct quotes and draws on public sentiment. From a purely journalistic standpoint, it’s clickable, current, and emotionally charged.

But that’s where the strengths end.

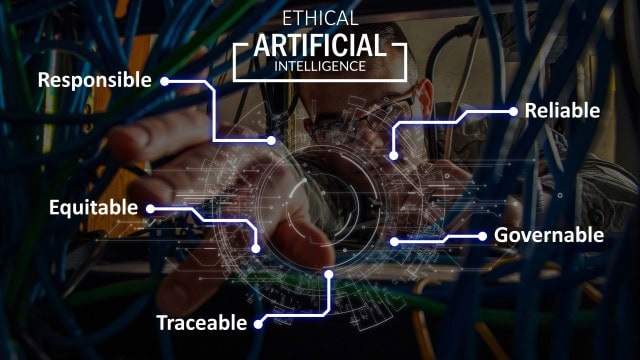

Weaknesses: The framing, “letting ChatGPT make military decisions,” is inaccurate and irresponsible. The article lacks corroboration from the DoD, the 8th Field Army, or OpenAI. It ignores established oversight frameworks such as the DoD’s Responsible AI Principles and DoD Directive 3000.09, which requires that autonomous and semi-autonomous weapon systems allow commanders and operators to exercise appropriate levels of human judgment over the use of force. The DoD’s Responsible AI Principles are:

- Responsible: Personnel must exercise appropriate judgment and care.

- Equitable: Deliberate steps are taken to minimize unintended bias.

- Traceable: AI capabilities are developed and deployed using transparent and auditable methodologies.

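- Reliable: AI capabilities have explicit, well-defined uses.

- Governable: AI capabilities are designed to fulfill their intended functions while retaining the ability to detect and avoid unintended consequences, and to disengage or deactivate systems that demonstrate unintended behavior.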

The article also overstates risks. It collapses legitimate conversation about decision augmentation into unsubstantiated moral panic. In the absence of balance, it becomes rhetoric, not reporting.

Bias and Omission

The author’s bias leans toward fear and distrust of technology. The framing suggests moral collapse rather than disciplined modernization. By omitting the DoD’s ethical principles (responsibility, equitability, traceability, reliability, and governability), the article erases the existing safeguards that protect human oversight and implies that the military and the General are misusing and abusing AI and ChatGPT. Nothing could be further from the truth.

The result? A narrative of recklessness that doesn’t exist. The selective use of anecdotes—mental health crises, user backlash—distorts proportionality and conflates separate issues.

The article manipulates public emotion while ignoring structure, process, and governance.

From an editorial perspective, the article flows smoothly but prioritizes drama over precision.

Its transitions are abrupt—especially between General Taylor’s comments and unrelated anecdotes like AI “suicide.” The final paragraph collapses into speculation. The structure serves outrage, not education. My editorial grade: C–. Readable and provocative but deficient in balance, context, and factual grounding.

Learning Opportunities For Readers

Gaps and omissions matter. They shape public fear, influence policy, and erode informed debate about AI’s legitimate, governed, and often lifesaving uses. The author could have addressed them but did not. These gaps represent learning opportunities for leaders.

- Governance Mechanisms: No mention of how AI decision-support tools are integrated, supervised, or audited within military command structures.

- Training and Competency: The author omits AI literacy initiatives and responsible-use protocols currently integrated into professional military education.

- Comparative International Context: There is no mention of how U.S. AI doctrine differs from that of NATO allies or adversarial systems such as China’s PLA, where human oversight is much less stringent.

- Operational Data Integrity: The article doesn’t explore how AI models are validated, secured, or aligned with classified decision frameworks.

- Media Accountability: Absent is any reflection on journalism’s role in shaping—and often distorting—the public’s understanding of AI risk using anecdotes.

In my experience, the Military Health System follows DoD policy and guidance, and AI is no different. Recently, the Defense Health Agency (DHA) published its digital health and AI strategy, outlined in the Military Health System (MHS) Digital Transformation Strategy, which focuses on four main lines of effort and embraces DoD policy and guidance on the responsible use of AI.

Its goal is to improve operational readiness, enhance patient care, and increase efficiency by integrating modern technology throughout the MHS.

Re-frame the Truth

Here’s what the General said—and what every responsible commander (CEO) understands. AI is an assistant. It helps check biases, stress-test assumptions, and accelerate planning cycles. It can summarize intelligence reports, simulate outcomes, and expose vulnerabilities faster than a single human staff member. But AI does not decide. Humans do.

Commanders are accountable. Always. Every AI system used in the military is governed, logged, reviewed, and overseen by human operators. AI helps shorten the OODA Loop—Observe, Orient, Decide, Act—without removing human morality or accountability.

More:

- ChatGPT and the 15 Decision Tools: Enhancing Health Leadership Decisions (Part 1/2)

- ChatGPT and the 15 Decision Tools: Enhancing Health Leadership Decisions (Part 2/2)

This is the same philosophy adopted by the American Medical Association (AMA), American College of Healthcare Executives (ACHE), American Nurses Association (ANA), and others, which explicitly define AI as an augmentative, not autonomous, capability. The goal across sectors (military, health, or emergency response) is not replacement. It’s responsible reinforcement. The Attachment lists these associations and organizations and summarizes their AI policy positions.

Become a Savvy Reader

Here’s how informed readers can protect themselves from manipulation:

- Look for what’s missing. If an article about AI never mentions “governance,” “oversight,” or “human control,” you’re reading opinion, not analysis.

- Check sources. Credible stories quote both technical and ethical authorities, not just isolated remarks.

- Assess motive. If the tone triggers fear before understanding, it was probably engineered to.

- Distinguish emotion from evidence. Outrage is not proof.

Being media-savvy is as critical to modern citizenship as digital literacy.

AI is Not the Devil! Responsible Dialogue and Leading By Example

To move beyond sensationalism to responsible AI, we need disciplined research and informed discussion. AI is here to stay. AI is not the Devil, whatever Mama Boucher might say in the movie The Waterboy. Becoming a responsible commander, leader, or healthcare executive means leading by example: embracing AI to be more efficient, effective, and authentic. Here are a few future directions for responsible inquiry.

- Lead by Example in the AI Era: Learn and embrace AI, using ChatGPT as a personal virtual coach to become a more efficient, effective, and authentic leader.

- AI Literacy in Uniform: Building Ethical Competence Among Military Leaders: Explore how leader development and education programs can integrate responsible AI into leadership curricula.

- Human-in-the-Loop Command: Rethinking AI Decision Support in Military Leadership: Examine governance, oversight, and accountability in AI-assisted command decisions.

- From Command Post to Console: The Psychology of Trust in AI-Augmented Leadership: Investigate how leaders can calibrate confidence in AI tools under pressure.

- Algorithmic Accountability in National Defense: Lessons for Civilian Health Systems: Compare AI governance and adoption in defense and healthcare to identify shared challenges.

- The Media’s Role in AI Fear Amplification: A Call for Responsible Reporting: Analyze how sensational AI headlines distort policymaking and public understanding.

These topics shift the conversation from paranoia to purpose—anchoring AI discourse in systems, ethics, and leadership responsibility.

Final Thought

The Yahoo story isn’t just a bad headline. It’s a warning about what happens when journalism trades precision for provocation, fear, and anxiety. Major General Taylor’s comments were about discipline and augmentation, not delegation or abdication. Yet in the race for clicks, his message was twisted into a caricature of recklessness. Don’t let it happen to you.

The truth is more straightforward: The military is not handing decision-making over to ChatGPT. It is enhancing it, testing how AI can strengthen human decision-making under supervision, within the law, and with ethical guardrails firmly in place.

Responsible innovation is happening. What’s missing is responsible reporting.

AI is not the Devil! AI doesn’t threaten good leadership; it magnifies it—when guided by judgment, governance, and integrity first.

Douglas E. Anderson, DHA, MBA, FACHE — Colonel (Ret), USAF | Strategic Health Leader | AI & Systems Thinking Advocate, Founder of SHELDR.com — advancing responsible AI adoption across the Health and Human Services sectors to build stronger leaders, healthier systems, and more resilient communities. Disclosure: ChatGPT, Grammarly, and QuillBot assisted in the development of this article.

Attachment: Health And Human Services Associations With AI Policies

AI is rewriting the rules across health and human services, pushing leaders to build more innovative policies or risk falling behind. From hospitals and public health agencies to social care and behavioral health, top associations have laid down positions on ethics, transparency, fairness, and oversight. This isn’t academic. It’s the frontline playbook for how AI must be governed, deployed, and kept accountable, before your organization becomes the next cautionary headline.

Healthcare and medical:

- American Medical Association (AMA): Emphasizes the need for physician involvement in the development and implementation of AI technologies, recommends government regulation, and has adopted policies aimed at ensuring transparency in AI tools.

- American College of Healthcare Executives (ACHE): Recognizes that AI holds great promise to change how healthcare is delivered and managed. Its stance emphasizes a balanced, responsible approach to AI, highlighting both its benefits and the moral issues it raises.

- American Nurses Association (ANA): Focuses on the ethical use of AI in nursing practice, emphasizing the importance of nurses maintaining human interaction and relationships, and staying informed to educate patients and families about AI.

- American Hospital Association (AHA): Recognizes AI’s potential to improve value-based care and reduce administrative burden, while also emphasizing the need to build trust and address challenges related to AI implementation. Provides an AI Action Plan.

- Healthcare Information and Management Systems Society (HIMSS): Provides global advocacy, research, and guidance on AI policy through its Global AI Policy Principles, focusing on patient safety, quality of care, accountability, and transparency.

- National Academy of Medicine (NAM): Developed the Artificial Intelligence Code of Conduct (AICC) project, which aims to provide a guiding framework for ensuring AI algorithms are accurate, safe, reliable, and ethical in health and medicine.

- The Joint Commission: Collaborates with the Coalition for Health AI (CHAI) to scale the responsible use of AI in healthcare, providing guidance, tools, and certification to accelerate innovation and mitigate risks.

Public Health:

- Association of Schools and Programs of Public Health (ASPPH): Established a Task Force for the Responsible and Ethical Use of AI in Public Health, focusing on education, research, practice, and policy.

- National Association of County and City Health Officials (NACCHO): While acknowledging the early stages of adoption, NACCHO highlights public health organizations’ interest in AI for tasks such as creating communication materials and plans.

Mental and behavioral health:

- American Psychological Association (APA): Provides ethical guidance for AI in professional practice, including transparency, informed consent, bias mitigation, data privacy, accuracy, human oversight, and liability.

- Anxiety and Depression Association of America (ADAA): Focuses on integrating AI into mental health practices, exploring opportunities in patient, provider, and payer ecosystems, and emphasizing the importance of ethical considerations and human oversight.

- The National Council for Mental Wellbeing: Partners with companies like Eleos Health to increase clinician access to AI education, research, and provider-centric tools.

Social care and human services:

- Social Current: Emphasizes building trust, leveraging AI as an assistant, upskilling employees, and implementing AI ethically within human service organizations through robust governance frameworks.

- American Public Human Services Association (APHSA): Views AI as a promising tool to support human efforts and enhance human services, while stressing the need for a deep understanding of current technology and principles for re-engineering systems.

Cross-sector/general AI in health:

- Coalition for Health AI (CHAI): An interdisciplinary group (including technologists, academic researchers, health systems, government, and patient advocates) focused on developing guidelines and guardrails for trustworthy health AI systems.

- Digital Medicine Society (DiMe): Develops guidelines for the use of AI in healthcare.

- Partnership on AI: Brings together people from different backgrounds to talk about the problems and challenges that AI presents.

This list includes some of the most critical groups currently shaping the discussion and rules around AI in healthcare and human services. We need to keep in mind that this area is changing quickly, with new regulations and groups coming up all the time.

Leading health, public health, social care, and behavioral health organizations have now adopted explicit AI policies on ethics, transparency, and human oversight. Their frameworks are shaping national norms. Ignore them, and your organization risks compliance failures and public backlash. Master them, and you lead with integrity, trust, and sharper outcomes.