Is your AI strategy simply automating the same ineffective workflows?

Health leaders should recognize that only leadership can address systemic dysfunction; AI alone will not resolve these challenges.

Executive Summary: Generative AI highlights existing dysfunction in the Health and Human Services (HHS) sectors. Various reports indicate that only a small share of healthcare and HHS organizations have scaled AI solutions beyond the pilot phase, a gap often linked to challenges with leadership, governance, data quality, and workflow integration. Sustainable change requires disciplined AI management, not quick fixes. See the BONUS!

Move Beyond the AI Narrative: Data Quality Is Essential

Many health leaders hope AI will provide a transformative solution. Instead, generative AI is revealing the extent of existing system failures. The U.S. spent $4.9 trillion on healthcare in 2023, and CMS projects that annual national health expenditures will reach approximately $5.6 trillion in 2025. Yet studies show the U.S. has the lowest life expectancy and the highest rate of preventable and treatable deaths among its high-income peers. These outcomes are linked to factors such as high healthcare costs, barriers to care, and socioeconomic conditions.

AI cannot compensate for such deep-rooted dysfunction.

McKinsey’s 2024 report revealed that fewer than 15% of HHS organizations have scaled Generative AI beyond pilot projects. Most are still trying to connect EHRs that don’t talk to each other, share data across incompatible platforms, or measure outcomes beyond compliance checklists. CDC’s 2025 Digital Health Readiness Assessment found that over half of state health departments lack the capacity to integrate real-time data.

The primary challenge is not lack of vision but deteriorating infrastructure. AI is being introduced into systems constrained by outdated policies, inconsistent governance, and workforce fatigue. The sector requires decisive leadership, not just new algorithms.

Ask yourself this: Would you trust a clinical AI model that’s trained on incomplete, biased, or outdated data—knowing it’s guiding decisions about your community?

AI Will Not Succeed Without Addressing Culture

Health executives often prioritize technology purchases over addressing organizational culture. Successful generative AI adoption requires leadership and courage, because trust, empathy, and team morale cannot be automated. Don't allow an algorithm to value "efficiency" over equity, or your workforce will revolt.

Nebraska Medicine faced high first-year nurse turnover, driven by burnout, misaligned placements, and the stress of early clinical transitions. To address this, the organization partnered with an AI-driven workforce analytics platform that evaluated personality traits, communication styles, and behavioral strengths to improve nurse-unit matching. By aligning new nurses with teams and units where their profiles best fit, Nebraska Medicine improved retention, engagement, and overall satisfaction.

The initiative cut first-year nurse turnover by almost 50%, saving millions in recruitment and training costs. Leaders emphasized that AI didn’t replace human judgment—it augmented it by providing data-backed insights that strengthened mentorship, team cohesion, and culture alignment. The project demonstrated that AI-powered workforce intelligence—when integrated with leadership trust and onboarding redesign—can make healthcare organizations more stable, resilient, and responsive to staff well-being.

For Health and Human Services (HHS) executives, this illustrates how AI-enabled workforce analytics can serve as both a recruitment and retention engine, especially amid national shortages. The lesson: use AI to guide placement, mentorship, and leadership development while final decisions stay with people. When data meets empathy and organizational trust, technology becomes a leadership amplifier, not a workforce threat.

Organizational culture is more important than technology. AI initiatives fail when leaders delegate responsibility solely to IT rather than integrating AI into operational design. The Veterans Health Administration (VHA) has a broad strategy for adopting AI, which includes several pilots. Its approach focuses on collaboration and augmentation, not just automation.

AI adoption demands leaders who listen harder than they automate. Regular staff listening sessions are one practical way to do this: they create an environment where team members can openly voice ideas and challenges. Clear feedback loops and credit for team members' work promote a culture of collaboration. Leaders can also make decisions with empathy, putting employees' health and well-being first, which makes the workplace more balanced and welcoming.

Why Public Health Continues to Lag in AI Adoption

Public health should have been the first sector to lead in AI, yet it is years behind. CDC's Data Modernization Initiative exposed the reasons: fragmented infrastructure, underfunded staff, and archaic procurement rules. Only 14 states report using any AI-enabled analytics for outbreak forecasting or population risk mapping.

Local agencies are worse off. Rural health departments still track opioid overdoses with spreadsheets. Social service agencies still fax referrals to food banks. Behavioral health centers—especially in low-income counties—lack basic EHR interoperability.

AI could revolutionize coordination between hospitals, Medicaid agencies, and social service networks, but not when 22% of public health jobs remain vacant. Technology can’t fill leadership gaps. It only magnifies them.

Here’s the uncomfortable truth: if your team can’t manage yesterday’s data, it won’t survive tomorrow’s AI-driven systems. Medicaid data remains splintered across 56 jurisdictions. Hospital EHRs still operate like medieval fortresses. Social services data lives in siloed case management tools that can’t communicate with health exchanges.

The CMS 2023 Medicaid Interoperability Audit found that fewer than 40% of states could reconcile eligibility, claims, and provider data within a single quarter. That’s not a technology gap—it’s a governance failure.
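What "reconciling eligibility, claims, and provider data" involves can be made concrete. The sketch below is a minimal illustration, not a CMS method: it assumes hypothetical, simplified record layouts (eligibility spans per member, claims carrying a member ID, provider NPI, and service date, and a set of known provider NPIs) and reports what share of claims line up across all three sources, along with why each failure occurred.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical, simplified record layouts; real Medicaid data is far richer.
@dataclass(frozen=True)
class Eligibility:
    member_id: str
    start: date
    end: date

@dataclass(frozen=True)
class Claim:
    claim_id: str
    member_id: str
    provider_npi: str
    service_date: date

def reconcile(claims, eligibility, provider_npis):
    """Return (reconciliation_rate, unmatched), where unmatched lists
    (claim_id, reason) for each claim that fails a cross-source check."""
    # Index eligibility spans by member so each claim lookup is cheap.
    spans = {}
    for span in eligibility:
        spans.setdefault(span.member_id, []).append((span.start, span.end))

    unmatched = []
    for claim in claims:
        covered = any(lo <= claim.service_date <= hi
                      for lo, hi in spans.get(claim.member_id, []))
        if not covered:
            unmatched.append((claim.claim_id, "no eligibility on service date"))
        elif claim.provider_npi not in provider_npis:
            unmatched.append((claim.claim_id, "provider not in directory"))

    rate = 1 - len(unmatched) / len(claims) if claims else 1.0
    return rate, unmatched

if __name__ == "__main__":
    elig = [Eligibility("M1", date(2023, 1, 1), date(2023, 12, 31))]
    claims = [
        Claim("C1", "M1", "1111111111", date(2023, 5, 1)),  # reconciles
        Claim("C2", "M2", "1111111111", date(2023, 5, 1)),  # unknown member
        Claim("C3", "M1", "9999999999", date(2023, 6, 1)),  # unknown provider
    ]
    rate, unmatched = reconcile(claims, elig, {"1111111111"})
    print(f"{rate:.0%} reconciled")  # prints "33% reconciled"
    for claim_id, reason in unmatched:
        print(claim_id, reason)
```

Each failure reason maps to a different owner (eligibility operations, provider enrollment), which is the governance point: closing the gap means assigning accountability for each data source, not buying another tool.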

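Poor data quality undermines AI effectiveness. A widely cited 2019 study in Science found that a commercial risk-prediction algorithm, which used past healthcare costs as a proxy for medical need, systematically underestimated the needs of Black patients; correcting the bias would have raised the share of Black patients flagged for additional care from 17.7% to 46.5%. Such errors not only distort predictions but also put lives at risk.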

Data governance must be treated as a clinical function, not an IT chore. Every CIO should meet weekly with the CMO and Chief Equity Officer to review AI output variance by race, geography, and socioeconomic class. Leadership accountability must move upstream.
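What might that weekly review actually examine? A minimal sketch, assuming model outputs are available as records with a numeric `score` and demographic fields: it compares mean scores and the rate at which each group is flagged for extra care, then lists any group whose flag rate falls below 80% of the most-flagged group's rate. The `score` field, the 0.5 flag threshold, and the 0.8 cutoff (borrowed from the "four-fifths" rule of thumb used in U.S. employment-discrimination analysis) are illustrative assumptions, not a clinical standard.

```python
from collections import defaultdict
from statistics import mean

def subgroup_report(records, group_key, threshold=0.5):
    """Summarize model output by subgroup.

    records: list of dicts, each with a numeric "score" and demographic
    fields such as "race", "region", or "income_band" (assumed names).
    A score at or above `threshold` counts as flagged for extra care.
    """
    scores = defaultdict(list)
    for rec in records:
        scores[rec[group_key]].append(rec["score"])

    report = {}
    for group, vals in scores.items():
        report[group] = {
            "n": len(vals),
            "mean_score": mean(vals),
            "flag_rate": sum(v >= threshold for v in vals) / len(vals),
        }
    if not report:
        return report

    # Compare every group's flag rate with the most-flagged group.
    top = max(r["flag_rate"] for r in report.values())
    for r in report.values():
        r["ratio_to_max"] = r["flag_rate"] / top if top else 1.0
    return report

def groups_needing_review(report, cutoff=0.8):
    """Groups whose flag rate is below `cutoff` times the highest rate."""
    return sorted(g for g, r in report.items() if r["ratio_to_max"] < cutoff)

if __name__ == "__main__":
    outputs = [
        {"region": "urban", "score": 0.9}, {"region": "urban", "score": 0.7},
        {"region": "urban", "score": 0.4}, {"region": "urban", "score": 0.6},
        {"region": "rural", "score": 0.3}, {"region": "rural", "score": 0.6},
        {"region": "rural", "score": 0.2}, {"region": "rural", "score": 0.4},
    ]
    report = subgroup_report(outputs, "region")
    for group, stats in report.items():
        print(group, stats)
    print("review:", groups_needing_review(report))  # review: ['rural']
```

A gap alone does not prove bias, since groups can differ in underlying need; it is a trigger for the human review described above, not a verdict.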

Automation Without Oversight Amplifies Bias

AI doesn’t fix inequality; it industrializes it. When algorithms drive eligibility, referrals, or funding allocation, they inherit the same inequities built into the data.

Studies such as Stanford's 2024 AI in Child Welfare study have found that natural language models were more likely to flag low-income or minority families for risk, even when their cases matched those of white peers word-for-word. In healthcare, predictive models often over-prioritize urban patients with higher system contact, leaving rural and undocumented populations invisible.

Ethical governance isn’t a compliance checkbox—it’s a moral firewall. Every HHS organization should maintain a standing AI Ethics and Community Oversight Board composed of patient advocates, social service representatives, and data scientists.

Your organization cannot claim to prioritize equity if its algorithms perpetuate disparities in access to care. Proactively audit for bias to mitigate legal and ethical risks.

Overpromised AI benefits are a risk in themselves. Vendors may highlight automation and high returns, but failed implementations can carry significant costs, including lost trust and retraining expenses.

Leadership—not algorithms—determines AI success. North Carolina’s Healthy Opportunities Pilot integrated AI-driven data analytics to connect Medicaid recipients to food, housing, and transportation. The secret? Shared governance. Leaders from health, human services, and community organizations made joint decisions.

Meanwhile, California’s efforts to update its Medicaid systems have faced significant and ongoing challenges, including bureaucratic issues, legal uncertainties, and fragmented policies, which have historically hindered progress. If leadership teams do not collaborate across agencies, AI will not unify systems and may instead amplify the dysfunction of existing systems.

The Future Belongs to People-First Leaders Who Use the Determinants of AI Wisely

AI should amplify humanity, not erase it. The next generation of successful HHS organizations will view AI as a leadership multiplier, not a replacement. AI will not replace public health nurses, social workers, or case managers. It will empower them when leadership provides the necessary tools, training, and trust.

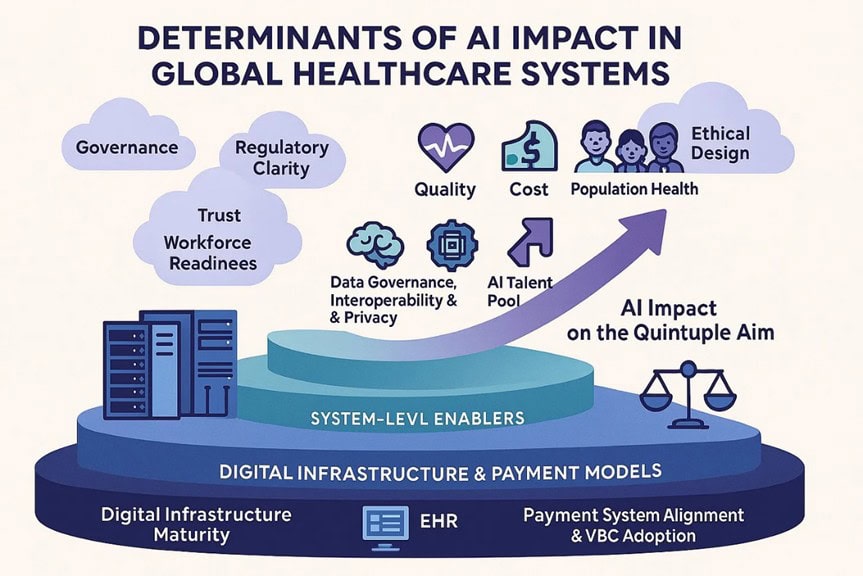

The framework above mirrors the crisis unfolding across U.S. Health and Human Services and systems worldwide: AI impact depends not on algorithms but on governance, data quality, and trust. The model shows three stacked layers: digital infrastructure and payment models (EHR maturity and value-based care alignment), system-level enablers (data governance, privacy, interoperability, and AI talent), and the policy-culture layer (regulation, ethical design, and workforce readiness).

Together, they determine whether nations reach the "AI Impact on the Quintuple Aim" of better quality, lower cost, improved population health, workforce well-being, and equity.

For HHS executives, the visual is a warning: weak infrastructure and fragmented payment systems choke innovation. Even the best algorithms collapse without interoperability, leadership alignment, and ethical oversight. The same forces limiting AI success globally (poor governance, insufficient training, and mistrust) mirror the risks facing U.S. HHS AI initiatives.

For example, the CMS AHEAD (States Advancing All-Payer Health Equity Approaches and Development) Model is an 11-year voluntary program (2024-2034) for states to make broad changes to healthcare delivery and payment by coordinating multiple payers, including Medicaid, Medicare, and at least one private payer. The CMS AHEAD and WISeR Models aim to link state and community health systems through predictive analytics and AI to address chronic disease and SDOH in real time. The difference is philosophical: leaders treat AI as a partner, not a product.

To succeed, HHS leaders must treat AI enablement as a system reform strategy, not a software rollout. Build governance boards that unify data, compliance, and ethics. Tie AI investments to value-based payment redesign. Create trust through workforce literacy and transparent audits. When governance, interoperability, and culture intersect, AI moves from hype to measurable impact—transforming fragmented programs into integrated, accountable community health systems.

Act Now or Risk Losing Leadership Relevance

Do not delay action. Successful HHS organizations act decisively and transform their systems before external disruptions occur. AI adoption is not solely a technology race; it is a test of management discipline. Effective leaders experiment, audit, learn from failures, and adapt quickly while others delay.

Delays strengthen competitors' models and give private industry greater influence over public-sector objectives. Adapt promptly to remain relevant. Take ownership, lead change, and secure your organization's future.

5 Actions Every Health Executive Should Take Right Now

- Step 1: Clean your data house. Within six months, audit, remove duplicates, and standardize all health and social data pipelines.

- Step 2: Build a cross-sector AI leadership team. Include your CIO, CMO, and community partners. Define shared accountability explicitly.

- Step 3: Train for trust first. Actively embed frontline staff in AI design to create buy-in, as fear blocks innovation.

- Step 4: Audit for bias openly. Run quarterly reviews for bias by race, income, and geography. Publish clear results to build trust.

- Step 5: Invest in AI literacy. Require AI training for all staff, making it as essential as HIPAA compliance.
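Step 1 is the most mechanical of the five, and a small example shows what "standardize and remove duplicates" can mean in practice. This is a minimal sketch under stated assumptions: records are dicts with hypothetical `name`, `dob`, and `zip` fields, only two date formats are recognized, and duplicates are detected by exact match on normalized values. Production patient matching typically uses probabilistic or referential methods instead; here, records that cannot be normalized are routed to manual review rather than silently dropped.

```python
import re
from datetime import datetime

# Date formats seen in the (hypothetical) source systems; extend as needed.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")

def normalize_date(raw):
    """Return an ISO 8601 date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def normalize_record(rec):
    """Standardize casing, whitespace, dates, and ZIP codes."""
    return {
        "name": re.sub(r"\s+", " ", rec["name"]).strip().casefold(),
        "dob": normalize_date(rec["dob"]),
        "zip": re.sub(r"\D", "", rec["zip"])[:5],  # keep 5-digit ZIP only
    }

def deduplicate(records):
    """Return (clean, needs_review) using exact-match dedup on normalized fields."""
    seen, clean, needs_review = set(), [], []
    for rec in records:
        norm = normalize_record(rec)
        if norm["dob"] is None:
            needs_review.append(rec)  # unparseable date: send to a human
            continue
        key = (norm["name"], norm["dob"], norm["zip"])
        if key not in seen:
            seen.add(key)
            clean.append(norm)
    return clean, needs_review

if __name__ == "__main__":
    raw = [
        {"name": "Maria  Lopez", "dob": "1980-03-05", "zip": "68102-1234"},
        {"name": "maria lopez", "dob": "03/05/1980", "zip": "68102"},
        {"name": "J. Smith", "dob": "unknown", "zip": "68131"},
    ]
    clean, review = deduplicate(raw)
    print(len(clean), "clean,", len(review), "for review")  # 1 clean, 1 for review
```

The first two records describe the same person in two formats; only after standardization do they collapse into one, which is why the audit has to precede any model training.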


Lead Change or Be Directed by Algorithms

AI is here, but most HHS organizations remain stuck, hoping algorithms will fix persistent problems. The sector requires courage from leaders who reclaim control from vendors, build cross-sector trust, and fix their data before launching new models.

This is a pivotal moment. Act now to shape the future of care, or risk having others determine your organization’s direction.

Decide now whether you are building an AI-ready organization or allowing your mission to lose relevance. Lead proactively.

Consider the discussion questions and learning activities, then check out these articles at the Strategic Health Leadership (SHELDR) website:

- 8 AI Breakthrough Moments Shaping AI in Healthcare: Eye-Opening, Powerful Lessons Every Strategic Leader Must Know (Part 1/3)

- 6 High-Impact, Game-Changing Opportunities for Smarter AI in Healthcare Every Forward-Thinking Leader Should Seize Today (Part 2/3)

- 8 Costly, Preventable AI Mistakes in Healthcare That Strong Leaders Can Still Fix Before It’s Too Late (Part 3/3)

Deep-Dive Discussion Questions

- How can AI governance models balance innovation speed with ethical and workforce safeguards in HHS organizations?

- What lessons from Nebraska Medicine’s AI-driven retention success can inform national workforce modernization efforts?

- How do payment system misalignments block AI-enabled transformation in Medicaid and public health systems?

- Where should data governance reside—within IT, compliance, or clinical leadership—for maximum organizational accountability?

- How can leaders overcome “AI hype fatigue” while still fostering curiosity and innovation within their teams?

- What are the leadership risks of delegating AI strategy to vendors without internal competency or cross-sector input?

- How can states use CMS AHEAD and CDC modernization models to close AI readiness gaps across agencies?

- What does “people-first AI” look like operationally in hospitals, public health, and human services environments?

Professional Development & Learning Activities

- AI Readiness Audit: Conduct a 30-minute assessment mapping data quality, staff literacy, and workflow integration readiness across departments.

- Bias in Algorithms Simulation: Review a real-world AI bias case study, identify governance failures, and propose mitigation strategies through leadership role-play.

- Leadership Ethics Roundtable: Facilitate a 45-minute discussion on ethical dilemmas of automating eligibility, triage, or case prioritization in social services.

- Cross-Sector Collaboration Workshop: Pair teams from hospitals, public health, and social services to design an AI-powered population health initiative.

- Trust-Building Lab: Create short scenario-based dialogues where staff challenge AI decisions and leaders model transparent, empathetic responses.

- AI Literacy Challenge: Organize a quiz-based session where participants explain key AI terms in plain language to non-technical peers.

A BONUS!

Case Study Title: Courage and Control: Building Strong AI Governance and Trust in Health and Human Services

Introduction: When Leadership Lags Behind Algorithms

What happens when technology evolves faster than the people trusted to manage it? How long can public trust survive when algorithms make decisions leaders can’t explain?

Generative AI has exposed deep fractures within the health and human services (HHS) ecosystem. The United States spent $4.9 trillion on healthcare in 2023, yet fewer than 15% of HHS organizations have scaled AI solutions beyond pilot projects (McKinsey, 2024). The CDC's 2025 Digital Health Readiness Assessment found more than half of state health departments lack the capacity to integrate real-time data. Despite enormous investment, the gap between technical capability and leadership readiness continues to widen.

Problem Statement: The Leadership Gap in AI Governance

HHS leaders are introducing AI into fragile systems—without adequate governance, workforce literacy, or cross-sector accountability. As algorithms grow more complex, most organizations lack ethical guardrails, data governance frameworks, or oversight boards capable of auditing model behavior.

The core issue isn’t innovation—it’s leadership discipline. Without strong governance, AI amplifies the very dysfunction it was meant to fix: inequities, inefficiency, and distrust.

Background and Contributing Factors

The crisis in AI governance reflects seven interlocking failures:

- Data Fragmentation: CMS’s 2023 Medicaid Interoperability Audit showed fewer than 40% of states can reconcile eligibility, claims, and provider data within one quarter.

- Governance Gaps: Deloitte’s 2024 AI Governance in Health and Human Services report found that only 27% of public agencies have clear governance structures defining AI accountability.

- Cultural Resistance: Legacy leadership models prize compliance over innovation, slowing adaptation to new tools.

- Workforce Unreadiness: WHO’s 2023 AI for Health report emphasized that workforce literacy, not technology, determines AI success.

- Vendor Overreach: Overreliance on external vendors limits internal control over model training, data privacy, and ethical standards.

- Bias in Data: A 2019 Science study found that a widely used commercial risk algorithm, which predicted healthcare costs rather than need, substantially underestimated the care needs of Black patients.

- Siloed Oversight: The OECD’s 2024 AI Governance Frameworks in Healthcare stressed that HHS agencies still lack cross-department oversight linking IT, clinical, and ethics teams.

Together, these failures represent what Harvard faculty describe as a “governance void”—a structural absence of accountability where leadership must exist but doesn’t.

Consequences of Inaction

If this governance void persists, the damage will be systemic:

- Reputational Collapse: Public trust in AI-driven systems declines after every algorithmic failure.

- Ethical Breaches: Without review boards, biased models can perpetuate discrimination in eligibility or service prioritization.

- Financial Waste: Billions are spent on pilots that never scale.

- Moral Injury: Frontline staff forced to “trust the system” lose confidence in leadership.

- Loss of Life: Misguided automation in clinical or benefits decisions can result in real harm.

Governance failure doesn't just degrade data; it erodes the humanity of leadership.

Three Alternative Actions

1. Build a Unified AI Governance Framework

Action: Establish an enterprise-wide governance model linking ethics, data science, compliance, and operations.

Benefits: Builds transparency, standardizes accountability, and ensures algorithms align with human-centered values.

Risks: Implementation requires cross-department coordination and political courage.

Leadership Imperative: Treat governance as a core clinical safety function, not an IT checklist.

2. Create Cross-Sector AI Oversight Boards

Action: Develop standing oversight bodies composed of healthcare leaders, data scientists, legal experts, and patient advocates.

Benefits: Promotes ethical consistency and shared accountability across HHS sectors.

Risks: Potential bureaucratic delays if not well managed.

Leadership Imperative: Shift from hierarchical decision-making to shared governance rooted in transparency.

3. Invest in Workforce AI Literacy and Culture Change

Action: Make AI literacy as mandatory as HIPAA training. Offer scenario-based learning that links AI to real-world ethics, trust, and safety.

Benefits: Builds staff confidence, reduces fear, and prevents misuse of automation.

Risks: Requires upfront time and training costs.

Leadership Imperative: Technology follows culture. Train minds before machines.

Conclusion: Leading AI with Courage and Accountability

AI governance isn’t about control—it’s about stewardship. The HHS sector can’t outsource moral responsibility to machines or vendors. Strong leadership must connect ethics, operations, and data integrity.

The leaders who act now—auditing bias, empowering cross-sector governance, and prioritizing workforce literacy—will define the future of trusted AI in health and human services. Those who wait will find themselves governed by their own neglect.

Discussion Questions

- What distinguishes effective AI governance from IT compliance?

- How can leaders balance innovation speed with ethical accountability?

- What governance structures could prevent bias in AI-based eligibility or care algorithms?

- How can HHS leaders ensure vendors remain accountable for data integrity?

- What leadership traits most determine successful AI adoption?

- Is transparency an ethical requirement or a competitive advantage?

- How should success in AI governance be measured?

Hashtags:

#StrategicHealthLeadership

#AIinHHS

#DataDrivenGovernance

#PublicHealthInnovation

#FutureReadyHealthcare