What will your board say when a patient’s death ties back to an unchecked algorithm?

AI is rewriting healthcare. Learn why leaders who master AI literacy, translation, and workforce growth will outpace rivals and safeguard patients.


Executive Summary

AI isn’t some future gamble—it’s already transforming how we predict sepsis, flag drug interactions, cut no-shows, and protect high-risk seniors. Health leaders who master AI literacy, translation, and internal development won’t just avoid disaster—they’ll build safer, leaner, more trusted systems. Those who stall will bleed cash, widen care gaps, and lose community faith.

Now is the time to upskill, challenge algorithms, and build teams that make AI work for people, not just profits. Which future do you want on your watch? To learn more, use the links, questions, learning activities, and references.

Ignite Urgency: AI Storms Healthcare Now

Artificial intelligence isn’t looming on the horizon. It’s here. It’s moving through our health and human services systems like a high-speed train, rewriting how we deliver, pay for, and evaluate care. Yet too many strategic health leaders still act like bystanders. They’re waiting for more money, greater clarity, and increased comfort. In the meantime, AI is reshaping the way healthcare is done.

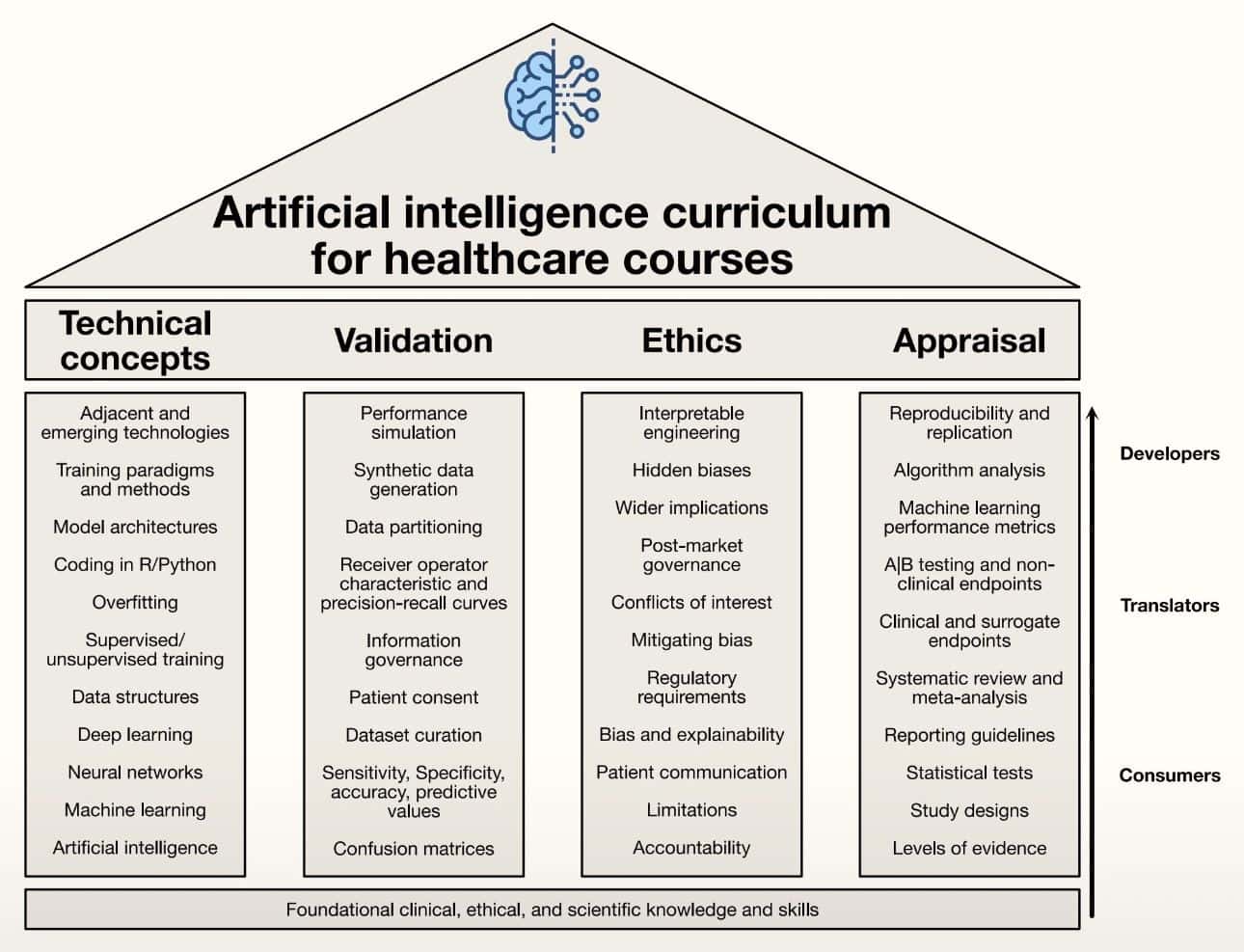

A landmark 2023 article, “Artificial Intelligence Education: An Evidence-Based Medicine Approach for Consumers, Translators, and Developers,” and its accompanying visual lay this out with precision. The authors argue for an AI curriculum in medical education that is grounded in evidence-based medicine.

Their model organizes learning into four pillars (technical concepts, validation, ethics, and appraisal) and sorts clinicians into three tracks: consumers, translators, and developers. It’s a sharp framework. However, if leaders fail to drive AI literacy now, our workforce risks splitting into those who thrive and those left behind.


Grasp AI Fast or Watch Patient Safety Crumble

Let’s keep it plain. AI isn’t just robots or science fiction. It’s software that learns from data to spot patterns, predict problems, and recommend actions faster than human eyes or minds can. Machine learning, deep learning, and natural language processing are the engines behind AI’s power.

Here’s the bottom line. If you lead in healthcare, public health, or social services and can’t explain the basics of AI, your decisions will be vulnerable. You’ll be guessing about tools that could reduce harm, cut waste, or save lives.

Delay AI Mastery, Bleed Cash and Trust

Healthcare organizations can’t afford to sleep on AI. “AI is going to change everything in medicine. I just can’t tell how or when, but I know that AI is not going to replace doctors—but doctors who use AI will replace those who don’t,” AMA President Jesse M. Ehrenfeld, MD, MPH, warned at ViVE in Los Angeles.

This isn’t about fancy tech or prestige; it’s about real consequences for patients, staff, and budgets. When leaders fail to drive smart adoption, the damage is far-reaching.

Widening disparities and slower innovation are already here. A recent Frontiers in Medicine study found that hospitals serving mostly Black patients are 10% less likely to use advanced AI for early sepsis detection. That drag doesn’t just stall new tools; it cements unequal outcomes.

Meanwhile, operational waste balloons. Forbes reports that administrative complexity costs the U.S. health system over $250 billion a year. AI could take on much of the work behind eligibility checks, prior authorizations, and denial appeals. Without leaders who know how to deploy these systems, the bleeding doesn’t stop.

Trust also takes a hit. When the public hears that an algorithm misclassifies patients, who stands up to explain the guardrails in place? During the COVID-19 pandemic, one model flagged 81 hospitalized patients as “home care” candidates, contradicting the doctors who had admitted them. Of those 81 patients, 76.5% (about 62) did fine; the remaining 23.5% (about 19) did not.

A leader who understands model bias and governance can steady nerves and drive fixes. One who doesn’t? They fuel suspicion. Leaders who master AI fundamentals don’t just chase shiny tech — they protect communities, shrink waste, and close gaps. Those who don’t will watch disparities widen, trust vanish, and costs continue to climb.

Seize 3 Core AI Skills or Risk Leadership Failure

AI isn’t a distant disruptor — it’s already transforming how health systems triage patients, detect fraud, predict deterioration, and manage paperwork. Leaders who stay passive will watch their missions splinter under the weight of unchecked risk, wasted dollars, and public backlash. The new health leadership mandate? Become fluent, translate wisely, and develop internal AI expertise.

Master AI Fluency or Get Outsmarted by Algorithms

A Stanford Medicine survey found that only 27% of health leaders felt confident explaining AI’s strengths and limitations to their boards. AI fluency isn’t coding in Python. It’s knowing what machine learning does well, where it breaks, and how to challenge its outputs. As Dr. Ziad Obermeyer of Berkeley warns, “If you don’t know how it works, you don’t know how it fails. And in health, that’s not acceptable.”

NYU’s Division of Applied AI deployed predictive models for sepsis, deterioration, and mortality risk, all designed to prompt intervention hours earlier than standard protocols. Leaders who sign off on tools like these need to understand them, so foundational AI literacy must become as routine as reading a balance sheet or tracking infection rates.

Translate Bedside Insight or Face AI Disasters

Good translators save lives and money. I once reviewed a vendor’s heart failure model that wrongly flagged older women as low risk because its training data skewed male. Without clinical oversight, those women would have waited too long for care. Translation means linking bedside insight to model design, knowing when AUC matters more than sensitivity, and spotting data pitfalls before regulators do.
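One way to spot a data pitfall like that one is a subgroup audit: compute sensitivity (the share of truly high-risk patients the model flags) separately for each group. The Python sketch below is a minimal illustration under stated assumptions: the records, group labels, and 0.5 threshold are hypothetical, invented for this example, and are not data from the vendor model described above.

```python
from collections import defaultdict

# Hypothetical validation records: (group, true_high_risk, model_score).
# Invented for illustration only; not real patient or vendor data.
RECORDS = [
    ("male", 1, 0.91), ("male", 1, 0.78), ("male", 1, 0.66),
    ("male", 0, 0.35), ("male", 0, 0.12),
    ("female", 1, 0.72), ("female", 1, 0.41), ("female", 1, 0.38),
    ("female", 0, 0.22), ("female", 0, 0.09),
]

THRESHOLD = 0.5  # assumed cutoff: scores at or above it count as "flagged high risk"


def sensitivity_by_group(records, threshold=THRESHOLD):
    """Return {group: sensitivity}, where sensitivity = TP / (TP + FN)."""
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, label, score in records:
        if label != 1:
            continue  # sensitivity only considers patients who truly are high risk
        if score >= threshold:
            true_pos[group] += 1
        else:
            false_neg[group] += 1
    # Only groups with at least one truly high-risk patient are reported,
    # so the denominator below is never zero.
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


if __name__ == "__main__":
    for group, sens in sorted(sensitivity_by_group(RECORDS).items()):
        print(f"{group}: sensitivity {sens:.2f}")
```

In this toy data the model catches every high-risk man but only one in three high-risk women, the kind of gap a translator should raise before go-live. A real audit would use far larger samples and report uncertainty for each subgroup.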

As Dr. Vindell Washington says, “If your leaders can’t probe a confusion matrix, they can’t protect your patients.” Intermountain Healthcare uses AI-based systems, such as the 3M CDI Engage platform, at the point of care to flag documentation issues and surface clinical insights, including potential drug interactions, to improve quality and safety.
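Probing a confusion matrix starts with four counts: true positives, false positives, false negatives, and true negatives. The minimal Python sketch below tallies those counts from hypothetical labels and alerts, then derives sensitivity, specificity, and positive predictive value (PPV). The function names and data are illustrative assumptions, not part of any product mentioned here.

```python
from typing import Dict, List


def confusion_matrix(y_true: List[int], y_pred: List[int]) -> Dict[str, int]:
    """Count TP, FP, FN, TN for binary labels (1 = condition present / model alerted)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be the same length")
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            counts["tp"] += 1
        elif t == 0 and p == 1:
            counts["fp"] += 1
        elif t == 1 and p == 0:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def summarize(counts: Dict[str, int]) -> Dict[str, float]:
    """Derive the ratios leaders most often ask about (NaN if a denominator is zero)."""
    tp, fp, fn, tn = counts["tp"], counts["fp"], counts["fn"], counts["tn"]
    return {
        # Of patients who truly have the condition, how many did the model catch?
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        # Of patients without the condition, how many did the model correctly leave alone?
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
        # When the model raises an alert, how often is it right?
        "ppv": tp / (tp + fp) if tp + fp else float("nan"),
    }


if __name__ == "__main__":
    # Hypothetical data for ten patients (1 = has the condition / model alerted).
    y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]
    counts = confusion_matrix(y_true, y_pred)
    print(counts)
    print(summarize(counts))
```

Here the model catches three of four true cases (sensitivity 0.75), but two of its five alerts are false alarms (PPV 0.60), the kind of trade-off that feeds alert fatigue on a busy unit.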

Without translation skills, hospitals court avoidable harm and steep fines.

Forge In-House AI Talent, Slash Outsider Control

Who builds and improves these systems? If it’s only vendors, your mission drifts. The Mayo Clinic reduced ICU length of stay by 16% with the help of an in-house AI command center. Blue Shield of California reduced claims rework by 40% through internal audits. UPMC used AI tools such as Abridge to summarize clinician-patient conversations and integrate the notes into the EHR, reducing administrative burden and streamlining scheduling workflows.

SCAN Health Plan, one of the nation’s largest not-for-profit Medicare Advantage health plans, launched the first phase of its AI-based predictive models, designed to improve health outcomes and inform benefit and service design. The models are meant to help SCAN identify high-needs members and deliver targeted interventions that prevent or reduce hospitalizations.

Ignore AI? Watch Errors, Fines, and Scandals Soar

Picture two futures. In one, your team stays clueless. Vendors sell black boxes. Clinicians reject tools they don’t trust. Errors climb. Regulators circle. Community trust evaporates.

In the other, your team builds skills steadily. They vet algorithms for bias, pilot tools tied to real needs, and talk openly with patients. You drive efficiency, protect lives, and build credibility.

| Checklist to Get Moving | Mistakes to Avoid | Quick Wins You Can Start Today |
| --- | --- | --- |
| Run an AI literacy audit. Who knows the core terms? Who can read validation curves? | Treating AI as IT’s problem. Instead, build it into clinical and public health workflows. | Host a lunch-and-learn on confusion matrices or A/B testing. |
| Build tiered learning paths. Consumers learn the basics, translators tackle advanced modules, and developers get hands-on data training. | Waiting for perfect evidence. Pilot small, learn fast, adjust. | Assign a team to map one administrative process ripe for robotic process automation (RPA). |
| Tie learning to operations. Make AI fluency as mandatory as HIPAA refreshers. | Skipping ethics training. Teach bias detection and patient consent alongside the tech. | Add an AI module to your next leadership retreat. |

Health leaders who master AI fundamentals won’t just avoid failure — they’ll steer their systems to safer, leaner, more trusted care. Wait too long, and someone else’s algorithm will decide your fate. As Dr. Isaac Kohane put it, “If you’re not pushing yourself here, you’re like those grads in 1990 who never bothered to learn word processors — already behind the curve, missing the tools everyone else is using to get real work done.”

Which future do you want on your watch?

Act Now: Secure Safer, Leaner, Trusted AI Care

AI is already determining who gets flagged for sepsis, who receives case management, and who falls through the cracks. Leaders who master AI literacy, demand transparency, and build internal expertise won’t just avoid scandals—they’ll deliver faster, safer, fairer care. Those who delay will see costs surge, trust erode, and their missions steered by opaque algorithms. How will you answer when your patients and community demand to know why you let outsiders drive critical decisions?

Consider the discussion questions and learning activities, and then explore the articles on the Strategic Health Leadership (SHELDR) website.

Propel Yourself Upward: The New 80/20 Rule Meets ChatGPT as A Strategic Health Leader’s Game-Changer

Deep Dive Discussion Questions

- Where should health systems draw the line between relying on vendor AI tools and building their internal expertise?

- How can you justify spending on AI education to a board that only looks at immediate ROI?

- What’s your response plan if a local journalist uncovers racial bias in an algorithm your organization uses?

- Which AI metrics (AUC, sensitivity, F1) matter most for high-risk clinical decision tools — and why?

- How could tiered AI training pathways reduce both workforce anxiety and patient harm?
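For the metrics question, it helps to see that AUC, sensitivity, and F1 answer different questions about the same model. The Python sketch below uses hypothetical risk scores for eight patients. AUC is computed with the rank-based (Mann-Whitney) definition, the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, so it ignores the alert threshold. Sensitivity and F1 are computed at assumed thresholds chosen only for illustration.

```python
from itertools import product
from typing import List, Tuple


def auc(y_true: List[int], scores: List[float]) -> float:
    """Rank-based AUC: share of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))


def sensitivity_and_f1(y_true: List[int], scores: List[float], threshold: float) -> Tuple[float, float]:
    """Sensitivity and F1 at one alert threshold (score >= threshold means 'alert')."""
    tp = sum(1 for t, s in zip(y_true, scores) if t == 1 and s >= threshold)
    fp = sum(1 for t, s in zip(y_true, scores) if t == 0 and s >= threshold)
    fn = sum(1 for t, s in zip(y_true, scores) if t == 1 and s < threshold)
    sens = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    # F1 is the harmonic mean of precision (PPV) and sensitivity (recall).
    f1 = 2 * precision * sens / (precision + sens) if precision + sens else 0.0
    return sens, f1


if __name__ == "__main__":
    # Hypothetical scores (1 = patient deteriorated); not real clinical data.
    y_true = [1, 1, 1, 0, 0, 0, 0, 0]
    scores = [0.62, 0.48, 0.45, 0.50, 0.30, 0.25, 0.20, 0.10]
    print(f"AUC: {auc(y_true, scores):.2f}")
    for threshold in (0.6, 0.45):
        sens, f1 = sensitivity_and_f1(y_true, scores, threshold)
        print(f"threshold {threshold}: sensitivity {sens:.2f}, F1 {f1:.2f}")
```

The AUC (about 0.87) stays the same at every threshold, but sensitivity swings from 0.33 at a 0.6 cutoff to 1.00 at 0.45. For a high-risk tool, that is one reason to ask for sensitivity and PPV at the threshold actually deployed, not AUC alone.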

Professional Development and Learning Activities

- Decode AI Bias Drill: Review a published case of algorithmic bias. Identify where it failed, who was harmed, and propose three immediate safeguards.

- Build an AI Fluency Brief: Assign teams to create a one-page explainer on machine learning basics for your board. Present it in under five minutes.

- Shadow and Translate Day: Pair clinicians with data analysts for a shift. Capture three ways bedside insight can shape AI model design and validation.

Hashtags

#AIHealthLeadership

#HealthcareInnovation

#ReduceHealthWaste

#TrustworthyAI

#FutureOfCare