Are you flying your GenAI mission—or watching it crash from the tower?

Discover how to avoid the top GenAI leadership traps—from trust collapse to compliance chaos. SHELDRs must lead from the cockpit.

Executive Summary

Generative AI promises to transform health and human services—but without trust, control, and compliance, it will collapse on takeoff. This chapter lays out the real-world dangers of poor leadership in GenAI rollouts and offers SHELDRs a strategic path forward. From fixing broken referral systems to leading with cockpit-level control, the mission is clear: fly it, don’t float it. Learn why AARs, quick wins, and privacy-by-design aren’t extras—they’re the difference between scaling and imploding. To learn more, use the links, questions, learning activities, and references.

Why GenAI Is a Strategic Leadership Risk

The Health and Human Services (HHS) sector is not prepared for the scale of disruption that GenAI is about to unleash. The July 2025 Harvard Business Review article, “Will Your GenAI Strategy Shape Your Future or Derail It?”, cuts deep: most GenAI failures stem not from poor technology, but from ineffective leadership. The piece warns of an all-too-common trajectory—organizations rushing to scale GenAI solutions before delivering any trust-building wins, losing grip on compliance, and underestimating the cultural shock to teams and communities alike.

The stakes are even higher for SHELDRs—Strategic Health Leaders—tasked with implementing AI-powered care coordination systems that span clinics, hospitals, nonprofits, public health, and social services. You’re not just deploying software. You’re trying to rewire how fragmented systems talk to each other, make decisions, and deliver care. Fail here, and you set back trust in both AI and the entire MAHANSI movement—Make All Americans Healthier as a National Strategic Imperative.

Let’s not sugarcoat this. As in the 1955 film Strategic Air Command, you are flying high-stakes missions with million-dollar payloads, except your fuel is public trust, your payload is human health, and your crash site is the community.

When AI Fails the Most Vulnerable—And You’re Liable

Funding has been secured. The vendor is prepared. The AI referral system is designed to analyze social determinants of health (SDOH), link clients with local services, and facilitate automated warm handoffs between health and social care sectors. It appears to be an ideal scenario. Until failure occurs.

Your frontline teams stop trusting the recommendations. A flawed match directs a vulnerable client to the wrong housing agency. Someone gets hurt. Word spreads. Your partner organizations pull back. The local press refers to it as another “AI overreach.” Compliance officers start digging through logs. A brilliant solution becomes a reputational and regulatory firestorm.

What failed? Not the model. Not the ambition. You were unable to establish trust, enforce control, and maintain compliance from day one.

3 Unbreakable Rules for Leading Health AI

Control Is a Leadership Function, Not a Tech Feature: The illusion of control has killed more systems than bad code. SHELDRs must set the rules of engagement before the first pilot goes live. That means human-in-the-loop policies, role-specific permissions, auditable prompts, and fail-safe escalation paths.

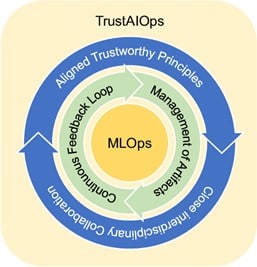

To build trustworthy AI, health leaders must go beyond the basics of continuous quality improvement (CQI). It’s not enough to deploy models. You need a continuous operational workflow that aligns with core principles of trust, safety, and transparency. That’s where TrustAIOps comes in. It extends traditional CQI by embedding continuous feedback loops, real-time artifact tracking, and cross-disciplinary collaboration from day one. The focus shifts from model deployment to responsible, adaptive system management that is built to earn trust and withstand scrutiny. For example, when a model flags a referral as high urgency but the clinician disagrees, the platform needs built-in override, documentation, and rationale fields so the decision can be substantiated later.

SHELDRs must integrate that thinking into all operational workflows.
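The override scenario above can be made concrete with a small sketch. It assumes a hypothetical record schema (the class name `ReferralOverride` and every field name are illustrative, not taken from any real platform) and shows one way a system could refuse to store an override that lacks a rationale.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ReferralOverride:
    """One clinician override of a model's urgency rating (hypothetical schema)."""
    referral_id: str
    model_urgency: str       # what the model recommended, e.g. "high"
    clinician_urgency: str   # what the clinician decided instead
    clinician_id: str
    rationale: str           # free-text justification, required
    recorded_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    def __post_init__(self):
        # Refuse to store an override that nobody can explain later.
        if not self.rationale.strip():
            raise ValueError("An override must include a rationale.")
        # If both ratings match, the clinician agreed; nothing was overridden.
        if self.model_urgency == self.clinician_urgency:
            raise ValueError("Not an override: ratings are identical.")


# Example: a clinician downgrades a high-urgency referral and says why.
record = ReferralOverride(
    referral_id="ref-001",
    model_urgency="high",
    clinician_urgency="routine",
    clinician_id="clin-42",
    rationale="Client already housed; confirmed by phone.",
)
```

Making the record immutable (`frozen=True`) is a design choice for this sketch: an audit artifact that can be edited after the fact is harder to defend under scrutiny.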

Trust Isn’t Given. It’s Built Through Wins, Not Demos: HBR’s warning is clear: GenAI doesn’t earn credibility from pitch decks. It earns it from small wins in real-world settings. If your AI system cuts referral wait times or identifies unmet needs faster than a human can, measure the result, keep monitoring it, and share it widely.

These performance measures enhance the confidence of frontline staff and communities in the system. Quinn et al. (2020) highlighted that trust in medical AI is established through experience and expertise rather than through the excitement surrounding automation. Accreditation-style benchmarks should accompany AI solutions to validate their utility and safety.

Expert consensus reinforces this point. A 2025 Journal of Medical Internet Research article documented how healthcare workers trusted AI tools more when outcomes were visible, repeatable, and aligned with lived patient experiences (JMIR, 2025).

Compliance Must Be Baked In—Not Retrofitted After Deployment: Retrofitting compliance is like gluing armor to a fighter jet mid-flight. You’ll crack the fuselage. The only acceptable path is compliance-by-design: privacy, security, equity, accessibility, and consent woven into the AI from the start. That includes data minimization, HIPAA audit trails, and explainable outputs.

Momani (2025) highlighted serious failures in health data governance when GenAI tools ingested protected health information without appropriate purpose limitation or informed consent. The lesson? Start with privacy engineering—don’t slap it on later.
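As a rough illustration of data minimization paired with an audit trail, the sketch below strips a client record down to an allow-list before anything reaches a model and writes one log line describing what was sent and withheld. The field names, the allow-list, and both function names are assumptions made for this example; a real deployment would derive them from its own legal and privacy review.

```python
import json
from datetime import datetime, timezone

# Hypothetical allow-list: the only fields a housing-referral prompt needs.
ALLOWED_FIELDS = {"age_band", "zip3", "housing_status", "household_size"}


def minimize(record: dict) -> dict:
    """Keep only allow-listed fields; everything else never reaches the model."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


def audit_entry(user_id: str, purpose: str, record: dict, sent: dict) -> str:
    """Build one JSON audit line: who sent what, why, and what was withheld.

    Only field names are logged, never field values, so the log itself
    does not become a second copy of the protected data.
    """
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "purpose": purpose,
        "fields_sent": sorted(sent),
        "fields_withheld": sorted(set(record) - set(sent)),
    })


client = {
    "name": "Jane Doe",
    "ssn": "000-00-0000",
    "zip3": "787",
    "housing_status": "unstable",
}
payload = minimize(client)
log_line = audit_entry("nav-17", "housing_referral", client, payload)
```

The stated `purpose` in each entry is what lets a reviewer check purpose limitation after the fact: every disclosure is tied to a reason someone had to write down.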

And don’t overlook regulatory direction. The Harvard Business Review article “How to Regulate Generative AI in Health Care” (HBR, 2024) makes the case for a new compliance architecture to address the black-box nature of GenAI.

GenAI Leadership Isn’t Ground Control—It’s Cockpit Work

In the movie Strategic Air Command (1955), Jimmy Stewart plays a bomber pilot recalled into service to help transition the military into the jet age. He’s not flying drones. He’s flying high-stakes missions that require skill, endurance, and complete control. That’s the leadership mindset GenAI demands.

Many health leaders today act like tower controllers—approving AI systems but remaining detached from their actual deployment, testing, and consequences. That’s dangerous. SHELDRs must be cockpit-level leaders. Involve yourself in every After Action Report (AAR). Walk the floors. Ask real questions.

If your frontline team doesn’t trust the tool, you won’t be able to scale the solution.

3 Critical Skills Every AI-Savvy SHELDR Needs

Too many health leaders confuse AI literacy with coding. That’s a costly mistake. The real edge lies in operational fluency—knowing how models behave, where errors creep in, and when to override the system. But literacy alone isn’t enough. GenAI touches every layer of health delivery, finance, and public perception. Leaders must see the system, not just the tool. And compliance? It’s not a box to check. It’s a defensive strategy built in, not bolted on, from the very first day.

Operational AI Literacy: This doesn’t mean writing Python. It means understanding confidence scores, data provenance, retraining cycles, and error boundaries. Quinn et al. (2020) argued that without operational fluency, leaders can’t challenge AI outputs—or vendor overclaims. Know what can go wrong and what you can override.
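For leaders who want to see what "understanding confidence scores" means operationally, here is a minimal sketch. It assumes the model reports a confidence between 0 and 1 and that the organization has chosen a review threshold; the function name, the route labels, and the 0.80 default are all hypothetical.

```python
def route_recommendation(confidence: float, review_threshold: float = 0.80) -> str:
    """Decide whether a recommendation is shown directly or held for human review.

    The threshold is a policy decision owned by leadership, not a model property.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= review_threshold:
        return "auto_suggest"   # shown to staff as a suggestion
    return "human_review"       # held until a person signs off


print(route_recommendation(0.92))  # auto_suggest
print(route_recommendation(0.55))  # human_review
```

The point of the sketch is the question it raises: who set the threshold, on what evidence, and who is allowed to change it.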

Systems Thinking: GenAI doesn’t work in a silo. It touches everything—referrals, quality metrics, reimbursement, community outcomes. You must anticipate where political, ethical, and fiscal risks intersect. Li et al. (2021) framed this as “building organizational fluency in AI’s systemic effects.” Think like a regional commander—not a feature buyer.

Compliance-by-Design Engineering: Lead the design conversations with legal and ethics advisors from day one. Use Momani’s 2025 HIPAA compliance checklists. Red-team your referral workflows. Simulate harm. Then build defenses. Explainable outputs and override logic aren’t nice-to-haves. They’re non-negotiable.

Strategic AI leadership demands more than enthusiasm. It requires deep operational literacy, systems-level thinking, and compliance-by-design engineering. Leaders who fail to master all three risk putting their patients, teams, and reputation in harm’s way—and won’t see the failure until it’s too late to stop the damage.

Now let’s examine how to apply these competencies in real-world deployments.

How to Use GenAI to Power the MAHANSI Era

To Make All Americans Healthier as a National Strategic Imperative (MAHANSI), Generative AI must be used with discipline, direction, and hard limits. This isn’t about flashy apps or optimistic demos. It’s about making GenAI work for the people who usually fall through the cracks—safely, visibly, and under control. If SHELDRs want to move from pilots to sustained impact, they must lead from the cockpit, not the conference room.

The wrong approach is painfully familiar: launch fast, lean on vendor metrics, ignore user feedback, and react only after something goes wrong. That model not only derails AI—it destroys public trust and stalls the MAHANSI agenda. If the first referral mistake hits the front page, you’re already playing defense.

SHELDRs must flip the script. Start with one visible quick win—then make it repeatable. Pick a use case with high volume and low complexity, where GenAI can help clinicians and care navigators act faster and with better information. Measure it, improve it, and let frontline teams talk about what worked.

From there, impose rigorous operational control. No system gets deployed without clear escalation paths, override logic, and built-in auditability. That’s not optional—it’s airworthiness.

And compliance? Design it in like armor plating. Bring in legal and ethics teams before procurement. Test the system like it’s already under investigation. That’s what it means to lead GenAI in the MAHANSI era.

Treat GenAI like Strategic Air Command treated bombers: high-stakes assets flown by trained leaders with total mission ownership. Never autopilot. Never outsourced. Always under human command.

That’s how you use AI to make Americans healthier—not in theory, but in practice.

Treat GenAI Like a Combat Mission, Not a Vendor Contract

Strategic Health Leaders (SHELDRs) face a stark choice. Deploy GenAI with precision and purpose—or let it crash and take your credibility with it. This chapter walks through real-world dangers of AI referral failures, compliance gaps, and trust collapses. Vendors won’t save you. You’re the pilot. You own the mission. If you haven’t built control mechanisms, trained your teams, and hardened compliance from Day 1, you’re already at risk.

Stop chasing flashy demos. Start delivering documented wins. Create a loop of transparency and feedback. And above all—be the one who flies the mission, not the one blamed when it fails.

Question: What would your community say about your GenAI system if they saw the last 10 referral decisions?

Consider the discussion questions and learning activities, then check out these articles at the Strategic Health Leadership (SHELDR) website:

- Elite AI Leadership: 7 Irreplaceable Non-AI Soft Skills in the Health And Human Services Workplace

- 3 Soft Skills to Fuel Grit, Lead Boldly, Master AI

- 9 Deadly Health AI Governance Gaps That Invite Siege

Deep Dive Discussion Questions

- Which early signs of AI failure get missed in your referral system—and who’s accountable for detecting them?

- How are you quantifying “trust” from frontline teams and community partners during AI rollout?

- What regulatory gaps in your GenAI model could trigger audits or legal action today?

- What does “control” mean operationally—who can stop, override, or question AI decisions in real time?

- How do your AARs integrate real user feedback, and how often are leadership decisions adjusted because of them?

Professional Development & Learning Activities

- Red Team the Referral: Review your referral AI logic in a live tabletop. Identify and log all failure points.

- Build a Trust Dashboard: Design a dashboard that tracks AI errors, user overrides, and community complaints in real time.

- Simulate a Compliance Breach: Conduct a simulation where AI mishandles PHI. Practice the response—from comms to regulators.
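For the trust dashboard activity, one possible starting point is sketched below. It assumes each logged event is a dictionary with a `type` key taking one of four hypothetical values (`recommendation`, `override`, `error`, `complaint`); the event format and the function name are illustrative only.

```python
from collections import Counter


def trust_metrics(events: list[dict]) -> dict:
    """Summarize logged events into the headline numbers a dashboard would show."""
    counts = Counter(event["type"] for event in events)
    total = counts["recommendation"]
    if total == 0:
        # No recommendations yet, so rates are undefined; report zeros.
        return {"recommendations": 0, "override_rate": 0.0,
                "error_rate": 0.0, "complaints": counts["complaint"]}
    return {
        "recommendations": total,
        "override_rate": counts["override"] / total,
        "error_rate": counts["error"] / total,
        "complaints": counts["complaint"],
    }


events = (
    [{"type": "recommendation"}] * 4
    + [{"type": "override"}]
    + [{"type": "error"}]
    + [{"type": "complaint"}] * 2
)
print(trust_metrics(events))
```

A rising override rate is not automatically bad news. It may mean staff are engaging critically with the tool, which is why the number should be reviewed alongside the rationale behind each override.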

Videos

- Should AI be used in health care? Risks, regulations, ethics …

- Global AI Regulation: Health AI and the Future Expectations in ..

- Trustworthy AI in Healthcare

- With AI, ‘the only way to build trust is to earn trust’

References

- Quinn T, Challen R, Khan A, et al. Trust and Medical AI: The Challenges We Face and the Expertise Needed to Overcome Them. arXiv. 2020. https://arxiv.org/abs/2008.07734

- Li J, Cui R, He Y. Trustworthy AI: From Principles to Practices. arXiv. 2021. https://arxiv.org/abs/2110.01167

- Momani A. Implications of Artificial Intelligence on Health Data Privacy and Confidentiality. arXiv. 2025. https://arxiv.org/abs/2501.01639

- Blumenthal D, Patel B. How to Regulate Generative AI in Health Care. Harv Bus Rev. September 2024. https://hbr.org/2024/09/how-to-regulate-generative-ai-in-healthcare

Hashtags

#GenAILeadership

#AIComplianceCrisis

#HealthSystemTrustGap

#StrategicAIExecution

#SHELDRinCommand